Projects

Some projects I’ve worked on, listed in order of most to least recent start date.

Caiman

Aug 2023 - June 2024

Eta Compiler

Spring 2023

Project Oort

Jan 2022 - June 2023

Excursion Through C++

Summer 2021

SAPL Interpreter

Summer 2021

Cornell Mars Rover

2020 - 2023

KFBR

Fall 2017

Caiman

Caiman is a language that simplifies design space exploration and optimization of heterogeneous programs. This is a research project I worked on with Dietrich Geisler and Oliver Daids at Cornell in the CAPRA group led by Adrian Sampson. Image credits go to Dietrich. Read more about it here and here.

Eta Compiler

As part of a significant course project, I worked in a group of 4 to implement an optimizing compiler for a C-like language Eta which would eventually get extended to become Rho. It compiles into x86_64 and we only got around to supporting the System V calling convention.

We implemented our compiler in Java (although one of my teammates and I were tempted to use Rust) using the CUP parser generator and JFlex lexer generator. The result ended up being over 20,000 lines of code (excluding tests in eta/rho itself).

The project itself was tons of fun and I meant to expand upon it over the following Summer but got a bit caught up with internships and such. We ended up doing fairly well, and although we didn’t podium in the final competition, we did get a high score based on correctness, completion, and style with the original test suite the course staff used for grading.

One thing I think we did well was our tiling implementation. We used

dynamic programming for optimal tiling and a double visitor hierarchy which

essentially boiled down to a form of triple method dispatch so that we can

easily create tile patterns to match subtrees in the IR AST. This is

because we had one set of visitors visiting the pattern tree, another set

of visitors visiting the AST, and a final aggregate visitor visiting both trees

simultaneously. I suppose the challenge really came from striving for utmost

reusability and cleanliness in a language without pattern matching.

We composed patterns

and developed template patterns to easily add new instructions with minimal effort and

without code duplication. One such template pattern matches any

memory expression and converts it to the most optimal x86 addressing mode it can.

So MEM(X + Y * 4) becomes [X + Y * 4] while MEM(X + Y * Z) would have to

become something like

mov _t0, Y

imul _t0, Z

mov ..., [X + _t0]

Then, when building tiles for something like an add, we could construct a pattern tree like:

ADD(

ARG_PATTERN1,

ARG_PATTERN2,

CommutativePattern,

MaxOneMemoryAccess

)

Another part of the compiler I was proud of was our implementation of Chaitin-Briggs register allocation. We could have implemented Linear Scan register allocation, but I thought it would be tons of fun to implement Chaitin-Briggs. So that’s what I did. I very loosely followed the discussion in Professor Appel’s Modern Compiler Implementation in Java. It took a few attempts but after getting it working without Register Spilling, I was quite surprised to discover I managed to add that final part of the algorithm on the first try.

Unfortunately, with 5 hours until the deadline, while running a provided Mandelbrot fractal test program, I discovered that there was some error that we didn’t catch in all our unit tests. We tried going through the 5,000+ lines of assembly our compiler generated and isolating suspicious code into unit tests, but we were unable to find the bug in time. :(

Some things I worked on:

- x86 Instruction selection, and optimal tiling

- Chaitin-Briggs Register Allocation with move coalescing

- conditional copy and constant propagation, dead code elimination, live variables analysis, local value numbering

- Syntax-directed IR translation and type-checking

- Parser specification

Notable college era projects also include my BRIL compiler passes and GC, Bear Compiler Fuzzer, and Parallel Register Allocator.

Project Oort

Project Oort is a third-person space shooter game implemented in over 20k+ lines of Rust with OpenGL. You control a starfighter that must battle AI enemies in a zero-gravity asteroid field while managing the ship’s energy and shield.

Your ship is armed with a photon cannon, cloaking, and a gravity tether that allows you to swing from or pull asteroids or other ships.

There’s no deep aim of the game, the goal is to shoot down your enemies without getting shot down yourself. Note that, there is no atmosphere and therefore there is no drag that will slow down your ship. The ship moves at constant velocity unless accelerated or decelerated.

Controls: Left click to fire. Right-click to fire a grappling shot.

T to turn invisible. W, S to accelerate forwards or backward,

and mouse movement to control the rotation of the ship.

Shield: You have a shield, denoted by the blue number at the top left. If this goes to 0, your ship is destroyed and you will respawn somewhere randomly on the map. Your shield will regenerate slowly over time.

Energy: Denoted by the yellow number in the top left, your energy is necessary to fire lasers and accelerate your ship. Your energy will regenerate slowly over time.

Minimap: In the bottom right, you have a minimap to see where you are relative to lasers and asteroids. The minimap is a 2D projection of 3D space which is “top-down” respective to your camera angle.

Grappling Hook: You can fire a “hook shot” by pressing and holding right-click. Once landed on an object, a tether will be formed between the target object and the shooter. The distance between the shooter and the object will not exceed the distance between them when the tether was first formed. The amount a tethered object moves to ensure this depends on the momentum of each tethered object. To release the tether, release right-click.

Summary

Technical Implementations:

- A Forward+ 1 physically based rendering engine that supports area lights, cascading shadow maps, soft shadows, animated models, and ray-marched volumetrics

- A 3-phase collision detection system utilizing an Octree, Bounding Volume Hierarchy of Oriented Bounded Boxes, and triangle intersections parallelized with compute shaders. You can read more about this here.

- A behavior tree AI and a modification of A* for pathfinding

- Rigid Body Simulation with rotational motion

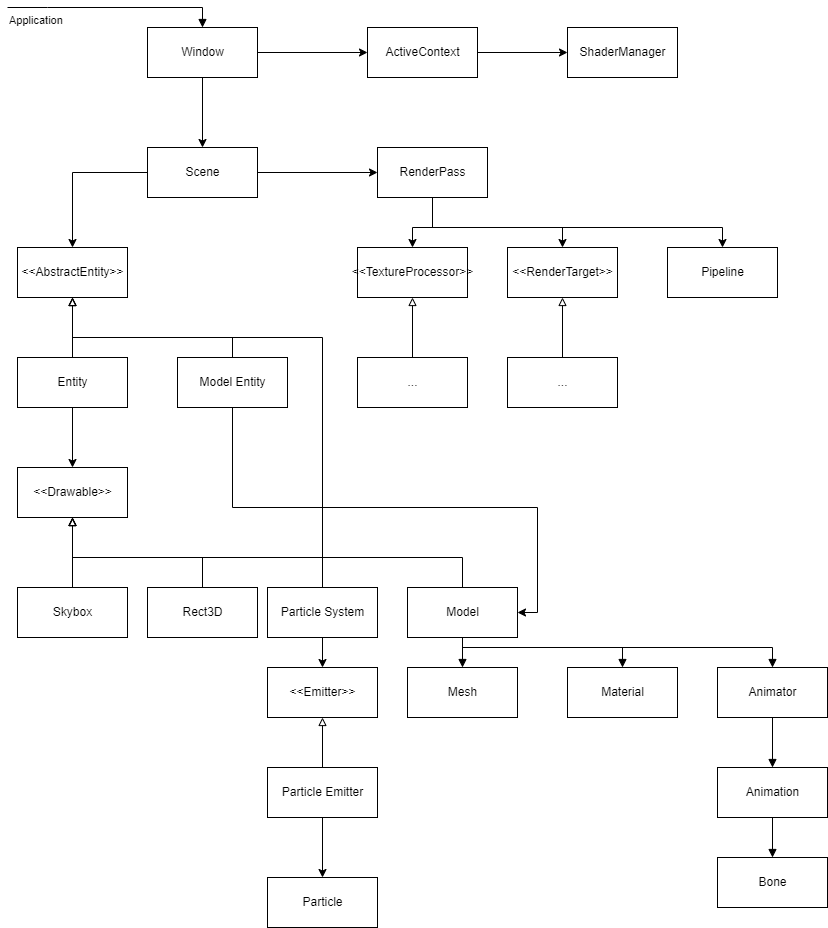

Graphics Engine

The graphics engine was designed, from the ground up using OpenGL.

The general structure is built on a pipeline system of RenderPasses,

RenderTargets and TextureProcessors. A RenderTarget is, well,

a render target where objects are drawn to and (typically) produces a texture.

A TextureProcessor is essentially a function on textures that takes input textures

and may produce an output texture. To pass extra state between stages, each stage has

read/write access to a pipeline cache.

A RenderPass, strings together multiple RenderTargets and TextureProcessors,

ordering them at the discretion of the Pipeline, which holds

a dependency graph of the stages in the RenderPass. The Pipeline is defined

by the user via a custom Rust DSL.

A Scene has a list of Entitys and RenderPasses. Each Entity can define

general properties that a RenderPass can access to render the Entity properly,

such as its required render order. A final Compositor can compose the results

of multiple RenderPasses together.

I have a blog post about how my graphics engine handles transparent objects here. Below is a diagram of the relevant architecture:

Collision Detection

I have a very detailed blog post about this here.

Physics Engine and AI

A rigid body simulation is used to handle the physics and collision resolution. Each rigid body is given a manually assigned mass or a manually assigned density. In the latter case, we can compute an estimated mass using the volume of the body’s bounding box. For each rigid body, we estimate an inertial tensor based on the vertices of the Rigid Body’s collision mesh.

Then at each step of the simulation, we determine the magnitude, direction, and point of application of the forces that are applied to each object. We then use the impulse-momentum theorem to compute changes in velocity. We subtract the point of application from the object’s center of mass to estimate a lever arm and compute an applied torque for the object. Using this and the inertial tensor, we compute a rotational angular velocity. I chose not to handle rotational velocity updates quite the same for the user-controlled ships for now, because it made the controls upon colliding with something feel unintuitive (ie. you lost control as the collision would impart a torque, rotating your ship).

For collision resolution, we compute a point of contact by averaging the centroids of colliding triangles and an impact force based on the momentum of colliding bodies.

The basic premise of the grappling hook is that if the two objects connected by the “cable” are too far apart, we essentially update the velocities of both objects by treating the cable being pulled as an elastic collision.

For the AI, a behavior tree is used to control the non-player enemy in the game. The behavior tree is built up of Sequence, Fallback, and ParallelSequence control nodes and custom action nodes for moving and firing. Pathfinding is done using a 3D implementation of A*, by tiling the 3D space into little cubes. Once a path has been computed, a simple local navigator just follows the path in segments of straight lines.

Source

Excursion Through C++

I wanted to compile a C++ reference for software subteam members of Cornell Mars Rover that covers (quickly) most of what one would possibly need to know. I already did something similar during the Winter of 2020, however, I wanted to make a document easier on the eyes, add more information, and make it more accessible.

The result is a guide, Excursion through C++, which comes with a few exercises and an example project. It did not end up serving its intended purpose, but it has been useful for my own reference from time to time.

It was a fun project, but I’m sure that it contains inaccuracies or mistakes in places.

Topics touched upon include:

- RAII, Interface and Reuse Inheritance, OOP Tips

- Noexcept, strong, and basic exception guarantees

- Smart Pointers

- Move Semantics, expression taxonomy

- RVO, NRVO

- Templates, SFINAE, type deduction, parameter packs, fold expressions

- Policy-based design, CRTP, TMP

- Threads, locks, mutexes, atomics, atomic memory orders

- C++20 features including concepts and coroutines

- Doxygen, GDB, CMake, Sanitizers, Valgrind, Unit testing with GTest, TDD, design patterns, GProf

Source

SAPL Interpreter

Shiny Alpaca Preprocessing Language (SAPL) is a dynamically typed language based on Rust, OCaml, Python, and C++. The name was chosen since shiny is an antonym of rusty, as in Rust, and the alpaca is somewhat similar to a camel, as in OCaml. The preprocessing language part was because originally the plan was for SAPL to be a preprocessor to any arbitrary language such as C or Javascript.

The language has features of imperative languages such as loops, classes, interfaces, and mutable arrays, but also has many functional features like pipelining, lambdas, and partial application.

I started this project after taking a class in functional programming, where one assignment was to build an interpreter for the language JoCalf. This project essentially “extends” JoCalf but with a modified syntax and is implemented in Rust.

The big takeaway from this project is to really think about the syntax and semantics of language features. I kind of added stuff that I thought would be cool without much thought, leading to certain combinations of features interacting in slightly strange ways. Notably, the intersection of lambdas, universal function call syntax, partial application, and class members can combine in a somewhat strange way.

Interesting Implementations:

- Universal function call syntax

- Checked precondition and postcondition expressions

- Iterators

Source

Cornell Mars Rover

I’ve been a member of the team since my first year at Cornell and was the Software Subteam lead for the 22-23 school year. For my senior year, I’ve decided to take a bit of a backseat role to make sure I have time for other things I want to do this year such as research.

We compete every year in the University Rover Challenge, held in Utah.

During my time on the team, I:

- Led a team of 12 to iteratively develop software for a rover using ROS (Robot Operating System), C++, and Python

- Redesigned the codebase to focus on code quality, maintainability, documentation, testing, readability, and reliability

- Created a runtime fault handling and dependency management system, and wrote a 300+ page Modern C++ reference guide

- Improved the pathfinding algorithm to support the autonomous location of AR tags and traversal through goalposts

- Implemented a multithreaded hardware abstraction layer and a communication format to support greater message throughput to the microcontroller. This improved performance by over 400%, reduced code coupling, and drastically increased testability

ASCII HW Message Format (First Year)

The first thing I worked on was implementing the CS side of a new message format that transmitted messages to the microcontroller managed by the electrical subteam. The previous year, the team decided to switch from a binary to an ASCII representation for easier debugging.

Concurrent Hardware Abstraction Layer (First Year)

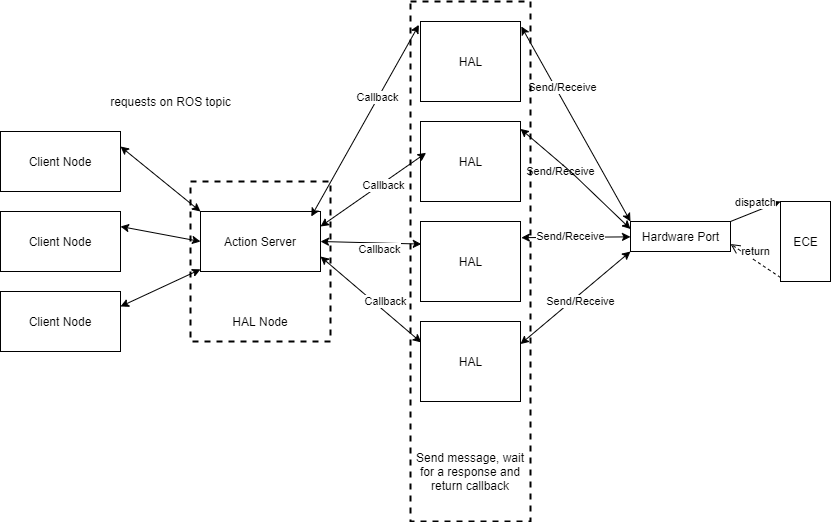

After the message format, I was tasked with improving message throughput so that our HW abstraction layer could handle the number of messages sent by the inverse kinematics controller for the arm, which was new the previous year.

To do this, my first thought was to use asynchronous programming (in fact, the project was dubbed Async HAL), however, this would require changing a lot of the code that interfaced with HAL which was written with sequential communication in mind. Since we used ROS, each module in our software was run in separate processes (known as nodes), which communicate over two-way channels known as actions. Therefore, what I ended up doing was multithreading the action server with a thread pool that handles action requests on the HAL side so that many clients can send requests at once. So for each request, the action server would push the request onto a thread pool to be processed in parallel with other requests.

Of course, we still only had one port going to the actual HW. To work around this, I designed a new port interface for reading and writing data and a port decorator which made accessing a decorated port thread-safe. This was achieved by having all calls to write to the port copy the message into a shared buffer and having a single thread handle sending the buffered messages to the HW. Reading was done similarly but in reverse with a single thread reading the port and distributing the messages to the correct client threads by matching message IDs of the SW requests and HW responses.

The new port interface also allowed SW testing. All we had to do was create a port that would read and write a memory buffer, and the complex logic of the decorator could be tested by decorating the memory port.

Things did not go smoothly though. I stumbled through bugs caused by race

conditions for over three months where I was a slave to the green PASSED

and red FAILED messages of my unit tests. I don’t remember what eventually fixed it,

but I think it had something to do with calling a function

that, unbeknownst to me, yielded control back to ROS.

Autonomy Refactor (Second Year)

During my sophomore year, I updated our autonomy algorithm to support passing through goalposts instead of just arriving near an AR tag. While doing this, I also refactored and completely reimplemented the algorithm using the State design pattern.

We use ROS to handle the obstacle avoidance and navigation to GPS coordinates, but it is up to us to implement the logic so that our rover can search for AR tags and navigate through goals.

Our algorithm revolves around a simple, yet very clever and effective idea that I won’t disclose.

I have some design documents and presentations I wrote describing this in more detail, but cannot share them here.

Fault Handling (Third Year)

As the subteam lead, I didn’t do a whole lot of implementation work during my third year. However, one thing I did do was create a decentralized fault-handling system.

The core idea is that we have a dependency graph of nodes. We never want a node to be running when something it depends on is not running, and we’d like no nodes whose sole purpose was to serve another node to be running when the system they exist to support is not alive.

To achieve this goal, when a node starts up, it activates its chain of dependencies in topological order and waits until all dependencies are running before continuing. On error, the node would do a similar process, notifying all nodes it supports that they must shut down and all nodes that support it that a depender is shutting down. After a few seconds, a fault handler would attempt to restart all of the shutdown nodes in topological order.

Subteam Lead (Third Year)

My main role was working with the other subteams on integration, assigning tasks, providing guidance, and other miscellaneous work.

This year, we were migrating from ROS 1 to ROS 2 which entailed reimplementing everything. We focused a lot more on using ROS libraries to avoid the extra work.

At the beginning of the year, I set up a lot of DevOps stuff:

- Doxygen-generated code docs

- Switched over to Clang, Clang-tidy, Clang-format

- Created formatting rules loosely based on Google’s style guide

- Established linting rules and a coding standard

- Created a Python unit testing library on top of Pytest

- Setup a CI and nightlies with Github Actions

I also introduced a new Agile-ish development model that focused on iterative development instead of the old, design-implement-test workflow that would delay testing until the spring. We used 3-4 week-long sprints, where I would help break up everybody’s projects into tasks that can be accomplished in a few weeks. I found it helpful to scope some tasks ahead of time in order to be able to provide resources and some insight into a direction to attack the problem. These changes, along with a greater focus on early SW testing in the simulation were well-received by the team.

We met for three, 2-hour work sessions a week along with independent work. While I was initially opposed to that much “oversight”, we found the designated work times to be really helpful to avoid getting too busy with other classes, and a set period where I am available to answer questions in person also proved useful.

KFBR

As a sophomore in high school, my favorite class wasn’t math or CS (although we didn’t have any CS classes at the time), but history. A huge part of that was my teacher for AP European History, who had a huge impact on me and whom I consider to be one of my role models to this day.

Well, in 2017 I decided I wanted to step away from high-level graphics libraries like SDL and Java Swing, and learn OpenGL. The culmination of my hard work was KFBR, a first-person multiplayer battle royale (recall Fortnite and PUBG just came out). KFBR, King Frederick’s Battle Royale was named after King Frederick I of Prussia, the military-obsessed father of Frederick the Great. Allegedly, on his deathbed bed Frederick I had his “blue boys” (soldiers) parade around him. The game was originally KHBR, named after King Henry VIII, but I felt that it would be more like Frederick I to host a Hunger Games-style battle royale.

One thing I wasn’t able to figure out was good collision detection and I settled for manually (and poorly) placed AABBs. However, I vowed to return to this problem and years later I did with Project Oort.

Some technical implementations:

- TCP server and client

- Blinn-Phong lighting model and shadow mapping from a directional light source

- Static model loading, skyboxes, blur

The code is an absolute mess, and I just keep this project here for an interesting data point in my historical archive. Other notable highschool era projects are Puzzle Solver and Activity Log.

Demo

Source